- 460

- 15 506 373

Yannic Kilcher

Switzerland

Приєднався 18 лис 2013

I make videos about machine learning research papers, programming, and issues of the AI community, and the broader impact of AI in society.

Twitter: ykilcher

Discord: ykilcher.com/discord

BitChute: www.bitchute.com/channel/yannic-kilcher

LinkedIn: www.linkedin.com/in/ykilcher

BiliBili: space.bilibili.com/2017636191

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: www.subscribestar.com/yannickilcher

Patreon: www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

Twitter: ykilcher

Discord: ykilcher.com/discord

BitChute: www.bitchute.com/channel/yannic-kilcher

LinkedIn: www.linkedin.com/in/ykilcher

BiliBili: space.bilibili.com/2017636191

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: www.subscribestar.com/yannickilcher

Patreon: www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

ORPO: Monolithic Preference Optimization without Reference Model (Paper Explained)

Paper: arxiv.org/abs/2403.07691

Abstract:

While recent preference alignment algorithms for language models have demonstrated promising results, supervised fine-tuning (SFT) remains imperative for achieving successful convergence. In this paper, we study the crucial role of SFT within the context of preference alignment, emphasizing that a minor penalty for the disfavored generation style is sufficient for preference-aligned SFT. Building on this foundation, we introduce a straightforward and innovative reference model-free monolithic odds ratio preference optimization algorithm, ORPO, eliminating the necessity for an additional preference alignment phase. We demonstrate, both empirically and theoretically, that the odds ratio is a sensible choice for contrasting favored and disfavored styles during SFT across the diverse sizes from 125M to 7B. Specifically, fine-tuning Phi-2 (2.7B), Llama-2 (7B), and Mistral (7B) with ORPO on the UltraFeedback alone surpasses the performance of state-of-the-art language models with more than 7B and 13B parameters: achieving up to 12.20% on AlpacaEval2.0 (Figure 1), 66.19% on IFEval (instruction-level loose, Table 6), and 7.32 in MT-Bench (Figure 12). We release code and model checkpoints for Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B).

Authors: Jiwoo Hong, Noah Lee, James Thorne

Links:

Homepage: ykilcher.com

Merch: ykilcher.com/merch

UA-cam: ua-cam.com/users/yannickilcher

Twitter: ykilcher

Discord: ykilcher.com/discord

LinkedIn: www.linkedin.com/in/ykilcher

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: www.subscribestar.com/yannickilcher

Patreon: www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

Abstract:

While recent preference alignment algorithms for language models have demonstrated promising results, supervised fine-tuning (SFT) remains imperative for achieving successful convergence. In this paper, we study the crucial role of SFT within the context of preference alignment, emphasizing that a minor penalty for the disfavored generation style is sufficient for preference-aligned SFT. Building on this foundation, we introduce a straightforward and innovative reference model-free monolithic odds ratio preference optimization algorithm, ORPO, eliminating the necessity for an additional preference alignment phase. We demonstrate, both empirically and theoretically, that the odds ratio is a sensible choice for contrasting favored and disfavored styles during SFT across the diverse sizes from 125M to 7B. Specifically, fine-tuning Phi-2 (2.7B), Llama-2 (7B), and Mistral (7B) with ORPO on the UltraFeedback alone surpasses the performance of state-of-the-art language models with more than 7B and 13B parameters: achieving up to 12.20% on AlpacaEval2.0 (Figure 1), 66.19% on IFEval (instruction-level loose, Table 6), and 7.32 in MT-Bench (Figure 12). We release code and model checkpoints for Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B).

Authors: Jiwoo Hong, Noah Lee, James Thorne

Links:

Homepage: ykilcher.com

Merch: ykilcher.com/merch

UA-cam: ua-cam.com/users/yannickilcher

Twitter: ykilcher

Discord: ykilcher.com/discord

LinkedIn: www.linkedin.com/in/ykilcher

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: www.subscribestar.com/yannickilcher

Patreon: www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

Переглядів: 11 797

Відео

[ML News] Chips, Robots, and Models

Переглядів 21 тис.4 години тому

OUTLINE: 0:00 - Intro 0:19 - Our next-generation Meta Training and Inference Accelerator 01:39 - ALOHA Unleashed 03:10 - Apple Inks $50M Deal with Shutterstock for AI Training Data 04:28 - OpenAI Researchers, Including Ally of Sutskever, Fired for Alleged Leaking 05:01 - Adobe's Ethical Firefly AI was Trained on Midjourney Images 05:52 - Trudeau announces $2.4billion for AI-related investments ...

TransformerFAM: Feedback attention is working memory

Переглядів 29 тис.9 годин тому

Paper: arxiv.org/abs/2404.09173 Abstract: While Transformers have revolutionized deep learning, their quadratic attention complexity hinders their ability to process infinitely long inputs. We propose Feedback Attention Memory (FAM), a novel Transformer architecture that leverages a feedback loop to enable the network to attend to its own latent representations. This design fosters the emergenc...

[ML News] Devin exposed | NeurIPS track for high school students

Переглядів 37 тис.14 годин тому

OUTLINE: 0:00 - Intro 0:21 - Debunking Devin: "First AI Software Engineer" Upwork lie exposed! 07:24 - NeurIPS 2024 will have a track for papers from high schoolers. 13:29 - Opus can operate as a Turing machine. 13:47 - An AI-Powered, Self-Running Propaganda Machine for $105 14:27 - TechScape: How cheap, outsourced labour in Africa is shaping AI English 16:25 - Is ChatGPT Transforming Academics...

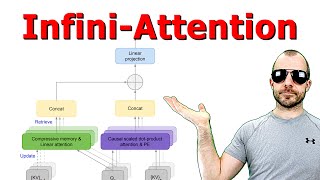

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Переглядів 43 тис.19 годин тому

Google researchers achieve supposedly infinite context attention via compressive memory. Paper: arxiv.org/abs/2404.07143 Abstract: This work introduces an efficient method to scale Transformer-based Large Language Models (LLMs) to infinitely long inputs with bounded memory and computation. A key component in our proposed approach is a new attention technique dubbed Infini-attention. The Infini-...

[ML News] Llama 3 changes the game

Переглядів 43 тис.21 годину тому

Meta's Llama 3 is out. New model, new license, new opportunities. References: llama.meta.com/llama3/ ai.meta.com/blog/meta-llama-3/ github.com/meta-llama/llama3/blob/main/MODEL_CARD.md llama.meta.com/trust-and-safety/ ai.meta.com/research/publications/cyberseceval-2-a-wide-ranging-cybersecurity-evaluation-suite-for-large-language-models/ github.com/meta-llama/llama-recipes/tree/main/recipes/res...

Hugging Face got hacked

Переглядів 30 тис.14 днів тому

Links: Homepage: ykilcher.com Merch: ykilcher.com/merch UA-cam: ua-cam.com/users/yannickilcher Twitter: ykilcher Discord: ykilcher.com/discord LinkedIn: www.linkedin.com/in/ykilcher If you want to support me, the best thing to do is to share out the content :) If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this): Subscrib...

[ML News] Microsoft to spend 100 BILLION DOLLARS on supercomputer (& more industry news)

Переглядів 21 тис.14 днів тому

Some updates from industry in the Machine Learning world Links: Homepage: ykilcher.com Merch: ykilcher.com/merch UA-cam: ua-cam.com/users/yannickilcher Twitter: ykilcher Discord: ykilcher.com/discord LinkedIn: www.linkedin.com/in/ykilcher If you want to support me, the best thing to do is to share out the content :) If you want to support me financially (completely optional and volu...

[ML News] Jamba, CMD-R+, and other new models (yes, I know this is like a week behind 🙃)

Переглядів 25 тис.14 днів тому

A flurry of new models continues to appear. Links: Homepage: ykilcher.com Merch: ykilcher.com/merch UA-cam: ua-cam.com/users/yannickilcher Twitter: ykilcher Discord: ykilcher.com/discord LinkedIn: www.linkedin.com/in/ykilcher If you want to support me, the best thing to do is to share out the content :) If you want to support me financially (completely optional and voluntary, but a ...

Flow Matching for Generative Modeling (Paper Explained)

Переглядів 34 тис.21 день тому

Flow matching is a more general method than diffusion and serves as the basis for models like Stable Diffusion 3. Paper: arxiv.org/abs/2210.02747 Abstract: We introduce a new paradigm for generative modeling built on Continuous Normalizing Flows (CNFs), allowing us to train CNFs at unprecedented scale. Specifically, we present the notion of Flow Matching (FM), a simulation-free approach for tra...

Beyond A*: Better Planning with Transformers via Search Dynamics Bootstrapping (Searchformer)

Переглядів 32 тис.21 день тому

Paper: arxiv.org/abs/2402.14083 Abstract: While Transformers have enabled tremendous progress in various application settings, such architectures still lag behind traditional symbolic planners for solving complex decision making tasks. In this work, we demonstrate how to train Transformers to solve complex planning tasks and present Searchformer, a Transformer model that optimally solves previo...

[ML News] Grok-1 open-sourced | Nvidia GTC | OpenAI leaks model names | AI Act

Переглядів 33 тис.Місяць тому

OUTLINE: 0:00 - Intro 0:15 - XAI releases Grok-1 2:00 - Nvidia GTC 4:45 - Comment of the Week 5:35 - Brute-forcing OpenAI model names 7:30 - Inflection AI gets eaten by Microsoft 9:25 - EU AI Act moving forward 11:45 - Advances in Robotics 14:00 - India retracts controversial advisory 14:30 - OpenSora 15:20 - Improved Gemma fine-tuning 16:20 - Decoding encrypted LLM traffic 17:45 - Varia Refere...

[ML News] Devin AI Software Engineer | GPT-4.5-Turbo LEAKED | US Gov't Report: Total Extinction

Переглядів 51 тис.Місяць тому

Your weekly dose of ML News OUTLINE: 0:00 - Intro 0:15 - Devin: AI software engineer 5:50 - Mira Murati on Sora training data 6:50 - Inflection accused of copying Claude 9:00 - Tools & papers 16:30 - GPT-4.5-turbo mystery 17:30 - US government report: total extinction by AI 19:20 - Various other news References: www.cognition-labs.com/introducing-devin cognition_labs/status/17675487...

[ML News] Elon sues OpenAI | Mistral Large | More Gemini Drama

Переглядів 32 тис.Місяць тому

#mlnews #ainews #openai OUTLINE: 0:00 - Intro 0:20 - Elon sues OpenAI 14:00 - Mistral Large 16:40 - ML Espionage 18:30 - More Gemini Drama 24:00 - Copilot generates spicy images 26:55 - Gemma bugs 28:45 - Varia References: gist.github.com/yk/0c065cdc8e414738abfaae4f8e417e00 Thumbnail pictures: Wikipedia Links: Homepage: ykilcher.com Merch: ykilcher.com/merch UA-cam: ua-cam.com/users/yannickilch...

No, Anthropic's Claude 3 is NOT sentient

Переглядів 43 тис.Місяць тому

No, Anthropic's Claude 3 is NOT sentient

[ML News] Groq, Gemma, Sora, Gemini, and Air Canada's chatbot troubles

Переглядів 40 тис.2 місяці тому

[ML News] Groq, Gemma, Sora, Gemini, and Air Canada's chatbot troubles

V-JEPA: Revisiting Feature Prediction for Learning Visual Representations from Video (Explained)

Переглядів 37 тис.2 місяці тому

V-JEPA: Revisiting Feature Prediction for Learning Visual Representations from Video (Explained)

What a day in AI! (Sora, Gemini 1.5, V-JEPA, and lots of news)

Переглядів 32 тис.2 місяці тому

What a day in AI! (Sora, Gemini 1.5, V-JEPA, and lots of news)

Lumiere: A Space-Time Diffusion Model for Video Generation (Paper Explained)

Переглядів 27 тис.2 місяці тому

Lumiere: A Space-Time Diffusion Model for Video Generation (Paper Explained)

AlphaGeometry: Solving olympiad geometry without human demonstrations (Paper Explained)

Переглядів 33 тис.3 місяці тому

AlphaGeometry: Solving olympiad geometry without human demonstrations (Paper Explained)

Mixtral of Experts (Paper Explained)

Переглядів 53 тис.3 місяці тому

Mixtral of Experts (Paper Explained)

LLaMA Pro: Progressive LLaMA with Block Expansion (Paper Explained)

Переглядів 34 тис.3 місяці тому

LLaMA Pro: Progressive LLaMA with Block Expansion (Paper Explained)

I created an AI-powered Social Network

Переглядів 25 тис.4 місяці тому

I created an AI-powered Social Network

NeurIPS 2023 Poster Session 4 (Thursday Morning)

Переглядів 12 тис.4 місяці тому

NeurIPS 2023 Poster Session 4 (Thursday Morning)

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained)

Переглядів 120 тис.4 місяці тому

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained)

Another Hit Piece on Open-Source AI

Переглядів 27 тис.4 місяці тому

Another Hit Piece on Open-Source AI

NeurIPS 2023 Poster Session 3 (Wednesday Evening)

Переглядів 8 тис.4 місяці тому

NeurIPS 2023 Poster Session 3 (Wednesday Evening)

![[ML News] Chips, Robots, and Models](http://i.ytimg.com/vi/tRavLU8Ih4A/mqdefault.jpg)

![[ML News] Chips, Robots, and Models](/img/tr.png)

![[ML News] Devin exposed | NeurIPS track for high school students](http://i.ytimg.com/vi/GtveKYXYo_0/mqdefault.jpg)

![[ML News] Llama 3 changes the game](http://i.ytimg.com/vi/kzB23CoZG30/mqdefault.jpg)

![[ML News] Microsoft to spend 100 BILLION DOLLARS on supercomputer (& more industry news)](http://i.ytimg.com/vi/DRwwjifoVZU/mqdefault.jpg)

![[ML News] Jamba, CMD-R+, and other new models (yes, I know this is like a week behind 🙃)](/img/n.gif)

There is an important element of chronology that seems to be missing in their strategy. The fact that they intentionally remove repeated info seems to drive that home. As if things happening more than once isn't relevant... maybe I'm not understanding but this paper seems way off.

"We find a way to make the memory of RNN larger and 2D". That is what I think, and maybe I am wrong.

I very like more technical content from you. I usually read tech news in telegram and your NL New are greats, but very ordinal and simple. So such paper explanations are kind of impact to the DS community, such videos grands new ideas and increase understanding of the field for those, who tried to dive in the deep. Of course it less popular due to complexity of material for audience, but much more interesting. So thank you for such format.

Oh, and talking about ideas I don' t have the money to test (see my other comment here for another crazy idea); could someone please try to train a model with training-time random ablation of both parameter count and quantization level, all on the same training, so that the final model from the get go is already optimized to work at whatever ablation level of parameter count and quantization derived versions end up having, essentially training not only for the outputs but also for sorting it''s own parameters and bits by how important they are?

Could someone with money to burn on dataacenter waste heat please test if it would work to have a model that is trained to re-write a certain fraction of it's own weights at inference time (half, a third, 10 percent etc); sorta like if instead of predicting tokens directly, it first predicts some of it's own weights based on the inputs; possibly also comparing if it's better to keep just certain layers unfrozen, just certain neurons unfrozen, let it decide what to freeze at training time, or even not freeze anything in specific and just let it "predict". not only the weights values but also which neurons to change as well Or some variation of this idea that makes a little more sense if what I described has some details that don't really match how the pieces fit together or whatever.

Where does Yw and Yl come from. Is it from the training dataset or the LLM that is being trained generates these and are labelled by humans or reward models as W and L?

I think it should be called Continous Attention is All you Need.

3:42 “Devin swoops around, does some stuff, introduces some bugs, then fixes those bugs.” Plenty of devs fit this description.

5:59 $2.4 billion for some ai programm, $100 million for house problems, I guess what are the priorities of the governemnt

The last fabric manipulation demo I saw maybe last year looked pretty similar... but the video was sped up 64x. The Google demo was actual speed, tremendously better.

There seems to be a conceptual problem, where are the preferences coming from given that they are expressed on multiple responses to the same prompt? Let's suppose we wish to fine-tune a foundation model for chat, we would not have the preferences before having done SFT and gathered some responses on the chat template format based prompt, that would force us to do SFT first and then SFT+ODDS_RATIO loss. Doable but surely not a single pass approach.

6:23 "There is enough research to show that once you are capable at one language, you only need quite little data on another language to transfer that knowledge back and forth" Does anyone give me related papers to this argument? I am interested in cross-lingual transfer in language models.

Thx again yan! 🎉

"Specifically, 1 - p(y|x) in the denominators amplifies the gradients when the corresponding side of the likelihood p(y|x) is low". I think that (1 - p(y|x)) have two different meanings here: it can be the result of differentiation by coincidence and also the "corresponding side" of the likelihood, i.e., 1 - p(y|x). So, when it says the "corresponding side" of p(y|x) is low, it means that 1 - p(y|x) is low.

just casually calling out tensorflow lol

Keep them comin

I liked the self deprecation at 32:00 haha

"Has anyone heard of Tensorflow lately?" Google engineers who is still using TF: crying behind the scene.

I found this more endearing than netflix 🥹❤️.

I just wish there were better options for DIY local/edge inference. Basically nothing since the Google Coral Edge TPU 5+ years ago.

makes me think of PPO

What's going on, is it a yannic bonanza time of the year! Loving these addicting videos

Glad you're back to technical content this time. Any AI UA-camr can give us latest AI news, but you're just about the only one that can give technical insight into the stories.

Funny that I thought of the same idea right after watching your last review on infini attention lol

The main loss function (7) looks like it can be meaningfully simplified with school-level math. Lor = -log(sigm( log ( odds(y_w|x) / odds(y_l|x)))), where sigm(a) = 1/(1 + exp(-a)) = exp(a) / (1 + exp(a)) Let's assume that both odds(y_w|x) and odds(y_l|x) are positive (because softmax) By plugging in the sigmoid, we get Lor = - log (exp(log(odds(y_w|x) / odds(y_l|x) )) / (1 + exp(log(odds(y_w|x) / odds(y_l|x)))) ) Note that exp(log(odds(y_w|x) / odds(y_l|x)) = odds(y_w|x) / odds(y_l|x). We use this to simplify: Lor = - log( [odds(y_w|x) / odds(y_l|x)] / (1 + odds(y_w|x) / odds(y_l|x)) ) Finally, multiply both numerator and denominator by odds(y_l|x) to get Lor = - log(odds(y_w|x) / (odds(y_w|x) + odds(y_l|x)) ) Intuitively, this is the negative log-probability of (the odds of good response) / (odds of good response + odds of bad response ). If you minimize the average loss over multiple texts, it's the same as maximizing the odds that the model chooses winning response in every pair (of winning+losing responses).

Good job! I suppose you mean `odds(y_l|x)` instead of `odds(y_l)` in the final equation.

@@peterszilvasi752 thanks! good catch :) /* fixed the previous comment */

very cool! thank you for this

6 videos in 7 days, I'm having a holiday and this is such a perfect-timing treat.

I personally think the memory part is kind of a "semi gradient" thing, similar to the concept we used in DQN, since it is going to store context over very long text, if the memory part still holds gradients it will get harder and slower to train as the text goes longer. So, once context is accumulated into memory, regard it as constant vector to serve the down streaming calculation, which is scalable. Correct me if I am wrong.

*Abstract (For Physicists)* Imagine a system where you're trying to optimize a complex energy landscape based on incomplete information. You have some examples of desirable low-energy states (winning responses) and undesirable high-energy states (losing responses), but no explicit energy function. This is analogous to aligning a language model with human preferences. Current methods often involve a two-step process: first, shaping the energy landscape through a coarse-grained process like simulated annealing (SFT), then refining it using the limited preference data (RLHF). We propose a novel method, ORPO, that combines these steps by introducing an auxiliary potential term based on the relative likelihood of the winning and losing states. This additional potential guides the system towards the desired regions of the energy landscape during the initial shaping process itself, resulting in a more efficient and effective optimization. ORPO demonstrates superior performance compared to existing methods, achieving lower "energies" (better alignment) with fewer computational resources. This framework provides a new perspective on preference-based optimization problems and has potential applications in various fields beyond language modeling. *The Role of Supervised Fine-tuning (SFT) in Alignment* - 0:46: Alignment aims to make language models better follow instructions and produce outputs that align with human preferences. This typically involves a multi-step process, including SFT and subsequent preference optimization (e.g., RLHF). - 2:25: This paper questions the need for this multi-step process and investigates the role of SFT in alignment. - 6:38: The paper proposes using preference data (winning/losing responses) during the SFT stage to achieve alignment in a single step. - 10:29: The standard SFT loss function (log likelihood) aims to maximize the likelihood of generating the correct tokens given a prompt. However, it doesn't distinguish between pushing down the likelihood of incorrect tokens in general and specifically pushing down the likelihood of undesired outputs. - 13:25: Due to this, SFT can inadvertently increase the likelihood of generating undesired outputs, highlighting the need for an additional mechanism to differentiate between acceptable and unacceptable responses. *Odds Ratio Preference Optimization (ORPO)* - 17:48: ORPO introduces an auxiliary loss term based on the odds ratio between the likelihood of generating the winning and losing outputs. This encourages the model to favor generating the desired responses while penalizing the undesired ones. - 18:19: The odds ratio provides a more balanced and numerically stable way of contrasting preferences compared to directly using the probability ratio. - 21:53: Analysis of ORPO's gradient reveals two key components: a penalty term that activates when the model is not aligned and a weighted contrast term that pushes the model towards the winning response and away from the losing response. *Results and Analysis* - 28:21: ORPO consistently improves the performance of various language models on alignment benchmarks compared to SFT and RLHF. - 28:55: ORPO models even outperform larger models trained with other alignment methods, demonstrating its efficiency and effectiveness. - 32:38: While ORPO excels at aligning model outputs with preferences, it may slightly reduce the model's overall fluency and diversity compared to methods like DPO. *Discussion* - 30:05: The paper discusses why the odds ratio is preferred over the probability ratio in ORPO, highlighting its numerical stability and effectiveness in the context of SFT. - 32:08: ORPO's computational efficiency is also emphasized due to the elimination of the reference model, leading to reduced memory and computational requirements. *Conclusion* ORPO offers a promising approach for aligning language models with human preferences in a single-step, efficient manner. Its effectiveness across various model sizes and datasets suggests its potential as a valuable tool for developing safer and more aligned language models. i used gemini 1.5 pro

10:33 LOL

Thank you Mr Klicher for delving into the paper, ORPO; Monolithic Preference Optimization without Reference Model

Thanks for explaining basic terms along with the more complex stuff, for dilettantes like myself. Cheers.

So, the typical UI is optimized for non-technical human users for accesibility, since they are the expect end-users. We have UI for technical people with a mix of performance and usability How would a UI designed for models/machines and optimized for performance look like? How much performant could a model trained on it become? It's a bit of a loop thinking about an UI optimized for a model and a model optimized for an UI. At the end the model could become a more powerful UI getting closer to both the user through natural language and conversation and also closer to the machine doing the actual work Basically another layer of high-low language

The comparison in the end between OR and PR should also discuss the influence of the log sigmoid, or? And, more importantly, how the gradients for the winning and loosing output actually would look like with these simulated pars... It feels a bit handweavy why the logsigmoid of the OR should be the target ...

why hat, indeed

great! now apply ORPO to a reward model and round we go!

I feel like AI models have gotten more stale and same-y ever since RLHF became the norm. Playing around with GPT-3 was wild times. Hopefully alignment moves in a direction with more diverse ranges of responses in the future, and less censorship in domains where it's not needed.

LLMs are what Machine Learning has always been: input output. Quality data makes the cake…. no matter how many fancy mixers you bring to the table.

26:30 that 'really?' and the following struggle with basic math is WAAAAY to relatable

0:52 : I wish we had a different term for this other than “alignment”

"Preference tuning" is used to describe it pretty often

@@TheRyulord thanks!

Thank you for being awesome Yannic, I send people from the classes that I "TA" for to you because you're reliably strong with your analysis.

Would be interesting to see how it compares to KTO. Would guess that KTO outperforms and is easier to implament as you dont need pairs of inputs.

Nice I was waiting for this after you mentioned ORPO in ML News :))

Can you please make a video on AI Report 2024

I don't even know what the title of this video means 😵💫. But I'm going to watch anyway.

Great to see research from my homeland of South Korea represented!

Woo allegence to tribes!!... .. ..

do you know Seoul?

There is only one korea

Wow good timing to go on youtube

You are on fire!

Nice!

Keep it up! Forgot about this series but like it!

16:04 gpt2-chatbot is a top performing not (yet) public new model. A recent (30.04) X post from Sam Altman hints at exactly this. It is currently being tested in the Hugging Face Chatbot Arena. It appears to be a GPT-2 model trained using OpenAI's industry-leading training methods.

Did you hear that George Hotz hacked the RTX 4090 driver to enable P2P? I'm pretty interested of the implications this has for running local LLMs, really fascinating stuff. It was on Hacker News' front page a few weeks ago!